Steal This 3x3 Creative Testing Framework to Cut Costs Fast (and Find Winners Even Faster)

9 Tests, 1 Grid: The 3x3 That Outsmarts Endless A/Bs

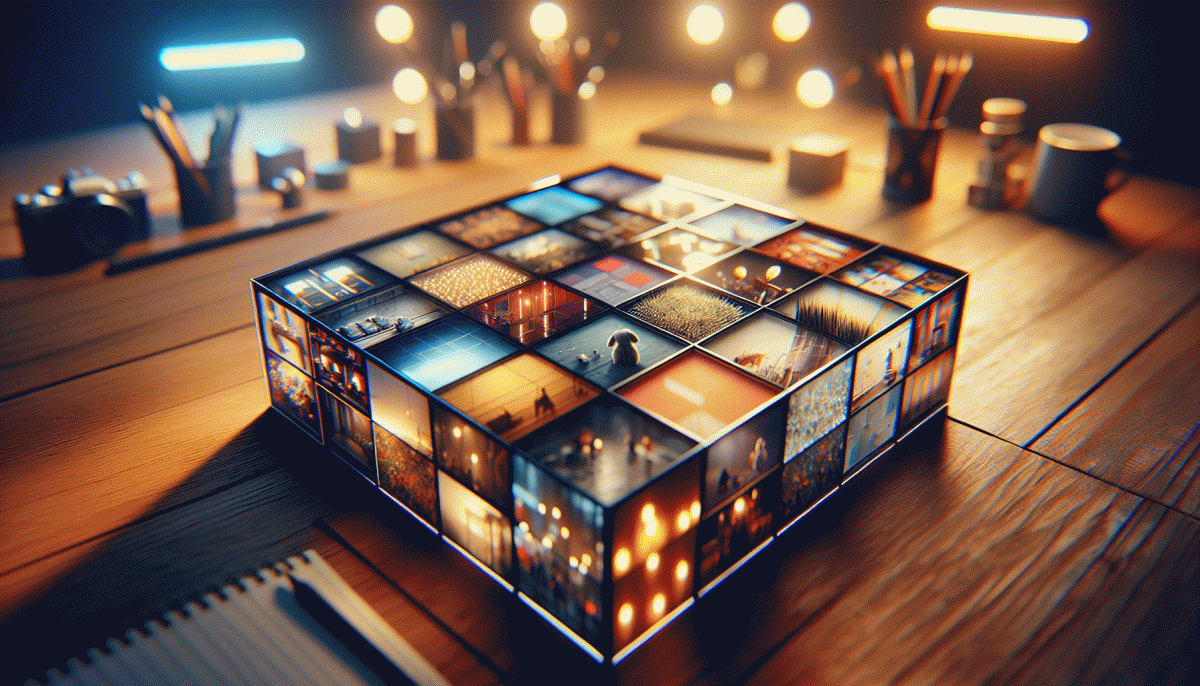

Stop running one-off A versus B marathons and start mapping smarter. Picture a tidy 3 by 3 board where each cell is a unique creative combo you can launch, measure, and decide on in a single short sprint. The grid forces discipline: three variants of one dimension crossed with three of another to expose clear winners, fast.

Choose your axes deliberately. One axis could be messaging tone: benefit-led, fear-of-missing-out, and playful. The other could be format: single image, short video, and carousel. That yields nine distinct ads to test at once. Alternatively, try headline x CTA, or concept x audience segment. The goal is to isolate interactions rather than chase endless pairwise tweaks.
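As a minimal sketch, the nine cells can be generated by crossing the two axes. The axis labels below are shorthand for the tone and format examples above, and `build_grid` is a hypothetical helper name, not part of any ad platform.

```python
from itertools import product

# Shorthand labels for the example axes in the text (assumed names).
tones = ["benefit", "fomo", "playful"]
formats = ["image", "video", "carousel"]

def build_grid(rows, cols):
    """Return one cell per (row, col) combination, in row-major order."""
    return [{"tone": r, "format": c} for r, c in product(rows, cols)]

grid = build_grid(tones, formats)
print(len(grid))  # 9
```

Swapping in headline x CTA or concept x audience only means changing the two input lists.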

Run the grid with equal budget slices and tight timing: think 3 to 7 days depending on traffic. Track one primary metric and two guardrail KPIs so decisions stay razor focused. Set a minimum sample threshold per cell before declaring a loser. When a cell outperforms by a meaningful margin, pull spend from the bottom tier and reallocate to scale the momentum.
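One way to make the equal split and the sample threshold concrete is sketched below. The total budget and the 1,000-impression default are placeholder assumptions; substitute whatever threshold fits your traffic.

```python
def split_budget(total, cells):
    """Give every cell the same share of the total test budget."""
    share = round(total / len(cells), 2)
    return {cell: share for cell in cells}

def ready_to_judge(impressions, min_impressions=1000):
    """A cell can be judged only once it reaches the sample threshold."""
    return impressions >= min_impressions

cells = [f"cell_{i}" for i in range(1, 10)]
budgets = split_budget(450.0, cells)   # hypothetical total test budget
print(budgets["cell_1"])               # 50.0
```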

Do not treat outcomes as final law. Read both cell performance and main effects: maybe one headline shines across visuals, which means you can recombine that headline with new formats in the next microtest. The grid reveals synergies so you can rescue winning elements rather than tossing whole creatives into the trash heap.
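Reading main effects amounts to averaging each row and each column of the grid. The sketch below uses made-up CTR values for a headline x visual grid purely for illustration. It takes a simple unweighted mean per level, which assumes the cells received similar traffic.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical CTRs (%) per (headline, visual) cell; not real data.
ctr = {
    ("H1", "V1"): 1.2, ("H1", "V2"): 1.4, ("H1", "V3"): 1.3,
    ("H2", "V1"): 0.8, ("H2", "V2"): 0.9, ("H2", "V3"): 0.7,
    ("H3", "V1"): 1.0, ("H3", "V2"): 1.1, ("H3", "V3"): 0.9,
}

def main_effects(cells, axis):
    """Average the metric per level of one axis (0 = headline, 1 = visual)."""
    groups = defaultdict(list)
    for key, value in cells.items():
        groups[key[axis]].append(value)
    return {level: mean(values) for level, values in groups.items()}

headline_effect = main_effects(ctr, 0)
visual_effect = main_effects(ctr, 1)
best_headline = max(headline_effect, key=headline_effect.get)
print(best_headline)  # H1
```

In this toy data H1 leads on every visual, so it is the element worth carrying into the next microtest.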

Quick checklist to execute: Define hypotheses, pick two test dimensions with three variants each, split budget evenly, set stopping rules, then scale winners. Think of this as speed dating for ideas: you will find chemistry faster, cut wasted ad spend, and iterate toward the truly profitable combinations.

Plug-and-Play Setup: Build Your Matrix in 30 Minutes

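Think of this as speed Lego for ads: grab three audiences, three creative angles, and three CTAs, then snap them into a tidy matrix. Crossing all three at once would give 27 cells, so for a true 3x3, cross two of the dimensions and hold the third constant in each round. Use a consistent naming convention so analytics does not become detective work later; channel_campaign_audience_hook_CTA is enough.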
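A small helper can enforce the naming convention so every cell name splits back into its fields. This is a sketch: the function names and the choice to lowercase and hyphenate each field are assumptions, not a platform requirement.

```python
def cell_name(channel, campaign, audience, hook, cta):
    """Join the five fields into channel_campaign_audience_hook_CTA."""
    parts = [channel, campaign, audience, hook, cta]
    # Lowercase and replace underscores/spaces inside a field so the
    # underscore stays a reliable separator between fields.
    cleaned = [p.strip().lower().replace("_", "-").replace(" ", "-") for p in parts]
    return "_".join(cleaned)

def parse_cell_name(name):
    """Split a cell name back into its five labeled fields."""
    keys = ["channel", "campaign", "audience", "hook", "cta"]
    return dict(zip(keys, name.split("_")))

name = cell_name("IG", "Spring Sale", "lookalike", "benefit", "Shop Now")
print(name)  # ig_spring-sale_lookalike_benefit_shop-now
```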

Spend the first 10 minutes selecting assets: three headlines, three visuals or clips, and three short CTAs. Duplicate layers in your ad manager to avoid rebuilding. Keep each creative under 15 seconds and export versions labeled exactly like your matrix cells so swapping is instant.

Allocate a modest test budget and set equal weight to every cell for the first 48 hours so the data is fair. If you need an instant boost for visibility while the test learns, consider a short, targeted paid promotion, but treat any paid lift as temporary while you identify true winners.

Measure with tight guardrails: CTR to surface attention, view-through or clicks for engagement, and CPA or ROAS for business signal. Pause cells that underperform your minimum threshold after the learning window and reallocate to top performers; this small habit cuts burn like a scalpel.

Final sprint: set up the matrix, upload named assets, schedule, and hit launch — all within 30 minutes. Review results at 48 and 96 hours, double down on winners, iterate hooks, and repeat. The faster you structure, the faster you find scalable creative.

The Signal Rules: Read Winners (and Losers) Without Overthinking

Think of signals like a traffic light for your creative tests: green for go, yellow for hold, red for kill — but powered by numbers, not feelings. Instead of overanalyzing every spike, boil each creative down to a couple of leading indicators (clicks, view-through rate, watch time) and one business-facing lagging indicator (adds, signups, purchases). The Signal Rules help you read winners and losers fast by forcing consistency across those views.

Rule 1 — Give it a short, sensible window: don't call a winner until a creative has either spent enough to matter (roughly 1k–2k impressions or your usual micro-conversion volume) or run 48–72 hours in stable traffic. Rule 2 — Look for agreement: a candidate winner should show uplift in at least two of three metrics (one can be a proxy). Rule 3 — Size the lift: set a practical bar (for many teams, ~15–25% lift vs. control is enough to justify scaling). These are heuristics to shortcut indecision, not gospel.

Rule 4 — Kill decisively: if a creative sits in the bottom quartile across metrics after the window, or delivers <50% of baseline conversion efficiency, cut it and reallocate. The faster you remove losers, the sooner winners get enough runway to compound. Don't waste top-of-funnel budget babysitting underperformers.
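The rules can be automated as a basic pass/fail flag. The sketch below is one possible reading, not a definitive implementation. It assumes every metric is "higher is better", treats Rules 2 and 3 together (at least two metrics must clear the lift bar), and covers only the "under 50% of baseline" half of Rule 4. The metric names and default thresholds are placeholders.

```python
def lift(variant, control):
    """Relative lift of variant over control, e.g. 0.20 for +20%."""
    return (variant - control) / control

def signal(variant, control, impressions, hours,
           min_impressions=1000, min_hours=48, min_lift=0.15):
    """Return 'green', 'yellow', or 'red' for one creative."""
    # Rule 1: wait for enough volume or enough time.
    if impressions < min_impressions and hours < min_hours:
        return "yellow"
    # Rule 4 (partial): under 50% of baseline conversion efficiency.
    if variant["cvr"] < 0.5 * control["cvr"]:
        return "red"
    # Rules 2 and 3: at least two metrics clear the lift bar.
    wins = sum(lift(variant[m], control[m]) >= min_lift for m in control)
    return "green" if wins >= 2 else "yellow"

control = {"ctr": 1.0, "watch_time": 5.0, "cvr": 2.0}   # hypothetical baseline
strong = {"ctr": 1.3, "watch_time": 6.0, "cvr": 2.1}
weak = {"ctr": 0.9, "watch_time": 4.0, "cvr": 0.8}
print(signal(strong, control, impressions=1500, hours=50))  # green
print(signal(weak, control, impressions=1500, hours=50))    # red
```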

Operationally, tag variants, automate basic pass/fail flags, and keep a short log of calls (why you paused or scaled something). Over time you'll train your gut to match the rules, which is the whole point: stop overthinking edge cases and use a repeatable signal system to find winners faster and shave costs without losing your creative spark.

Spend Less, Learn More: Kill/Keep/Scale in Three Simple Calls

Think of the three calls as a fast war room for creativity: Kill, Keep, Scale. Run a 3x3 matrix (three creatives across three audiences = nine test cells) with identical micro budgets, then watch for signal in 48 to 72 hours. The point is triage, not romance—kill what is clearly wasting money, keep what shows promise, and scale what returns value. That ritual turns noisy experiments into clear decisions.

Use simple, measurable thresholds so calls are not arguments. Aim for at least 1k to 5k impressions or 50 to 100 clicks per cell before calling, then evaluate CTR, CVR, CPA and ROAS. If CTR is under 0.5% and CPA is above target, kill. If a variant sits in the top 30 percent for CTR with stable CPA, keep and iterate copy or landing tweaks. If a variant beats baseline CPA by 20 percent or clears your ROAS bar, scale it. If you need instant reach to compress timelines, consider a short paid boost to get impressions quickly.
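These thresholds translate into a small decision function. This is a sketch under stated assumptions: "stable CPA" is read as CPA at or below target, the low end of the sample range is used as the minimum, and cells that match none of the rules fall into a "hold" bucket that the rules above do not define.

```python
def make_call(impressions, clicks, ctr, cpa, roas, *, ctr_percentile,
              target_cpa, baseline_cpa, roas_bar):
    """Return 'wait', 'scale', 'kill', 'keep', or 'hold' for one cell.

    ctr is a fraction (0.005 = 0.5%). ctr_percentile is the cell's CTR
    rank among all cells, where 1.0 is the best.
    """
    if impressions < 1000 and clicks < 50:
        return "wait"                      # not enough sample yet
    if cpa <= 0.8 * baseline_cpa or roas >= roas_bar:
        return "scale"                     # beats baseline CPA by 20% or clears ROAS
    if ctr < 0.005 and cpa > target_cpa:
        return "kill"
    if ctr_percentile >= 0.7 and cpa <= target_cpa:
        return "keep"                      # top 30% CTR with CPA on target
    return "hold"                          # assumption: no rule matched

limits = dict(target_cpa=45.0, baseline_cpa=50.0, roas_bar=3.0)  # hypothetical
print(make_call(4000, 80, 0.012, 38.0, 2.5, ctr_percentile=0.9, **limits))  # scale
print(make_call(4000, 15, 0.003, 70.0, 0.8, ctr_percentile=0.1, **limits))  # kill
```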

Budget moves should be blunt and repeatable. After call one, reassign 60 to 80 percent of the freed budget to the keep pile and hold the remaining 20 to 40 percent for new explorers. At call two, raise winning budgets to 2x, then to 4x only if CPA and frequency remain healthy. Always set a CPA ceiling and a frequency cap when scaling to avoid audience fatigue. Repeat the loop at 48 hours, day five, and day ten to catch both short and longer funnel winners.
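The budget moves reduce to two small calculations, sketched below. The function names, the 80 percent default share, and the sample numbers are illustrative assumptions.

```python
def reallocate(freed, keep_cells, keep_share=0.8):
    """Split freed budget: keep_share goes evenly to keepers, the rest to new tests."""
    to_keep = freed * keep_share
    per_cell = to_keep / len(keep_cells)
    return {cell: per_cell for cell in keep_cells}, freed - to_keep

def next_budget(current, cpa, frequency, cpa_ceiling, frequency_cap, multiplier=2):
    """Multiply the budget only while CPA and frequency stay inside the guardrails."""
    if cpa <= cpa_ceiling and frequency <= frequency_cap:
        return current * multiplier
    return current

keepers, explore = reallocate(100.0, ["cell_2", "cell_5"])
scaled = next_budget(50.0, cpa=40.0, frequency=2.1, cpa_ceiling=45.0, frequency_cap=3.0)
held = next_budget(50.0, cpa=52.0, frequency=2.1, cpa_ceiling=45.0, frequency_cap=3.0)
```

Here each keeper receives 40 of the 100 freed, 20 is reserved for new explorers, and a winner's budget doubles only when both guardrails hold.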

Make the calls like a barista pulling shots: timed, consistent, and documented. Log every kill and why it died, note the tweaks you applied to keeps, and record scaling guardrails. That discipline cuts spend, speeds learning, and surfaces real winners before you overcommit budget.

See It in the Wild: Turning Raw Ideas into Scroll-Stopping Instagram Ads

Start with three raw ideas and treat them like prototypes, not masterpieces. Choose one big benefit, one emotion, and one surprising mechanic as your ideas. For each idea create three executions — think a 6-second hook, a 15-second story, and a static hero image — so you can test concept versus execution without overinvesting in any single direction.

Frame each execution around a single variable to isolate what moves the needle. Change only the visual treatment in one set, only the headline in another, and only the CTA in the third. That controlled 3x3 grid lets you quickly spot whether the core idea or the creative execution is the winner, instead of guessing which element drove performance.

Run micro-tests with small budgets for 48–72 hours, then look for higher CTR, lower CPM, and early conversion signals. Promote the best performing creative into a scaling cell while muting variations that underperform by a clear margin. Use the results to generate new ideas: flip the winning hook, swap the hero product, or speed up the pacing.

When you repeat this fast, disciplined loop you end up with a stack of scroll-stopping Instagram assets that were born from cheap experiments, not risky bets. That is how you turn rough concepts into ads people stop on and remember.

Aleksandr Dolgopolov, 06 December 2025