Steal This 3x3 Creative Testing Method to Slash Costs and Skyrocket Wins

Why 9 Beats 90: Learn Faster Without Burning Cash

Stop burning cash on tiny tweaks and noisy data. The 3x3 approach forces discipline: test three headlines against three visuals and you get nine clear combos instead of ninety muddled variants. Fewer combinations mean clearer signals, faster decisions, and a budget that actually buys learning instead of noise.

Here is why the small grid wins every time:

- 🚀 Speed: Nine combinations reach statistical significance much faster than ninety, so you find winners before the budget evaporates.

- 🔥 Focus: With fewer moving parts you can pinpoint which element drives performance and iterate on it with intention.

- 🆓 Efficiency: Saving test spend lets you reinvest in audience refinement and creative polish rather than testing for testing's sake.

Want to pair fast testing with real reach while you iterate? Consider a vetted service, such as a safe Instagram boosting service, to amplify winners without blowing your budget.

Quick math to make it real: ninety variants at $10 each is $900; nine variants at $10 each is $90. Use the $810 saved to scale proven winners or buy quality audience data. Rule of thumb: after a control run, kill variants that underperform by 20 percent or more and double down on top performers. Run, learn, repeat: the 3x3 is ruthless efficiency dressed as simplicity.
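The arithmetic above is easy to rerun with your own numbers. Here is a minimal sketch; the $10 per-variant cost is the example figure from this article, and `grid_cost` is just an illustrative helper name.

```python
# Example figure from the article; swap in your own per-variant test cost.
COST_PER_VARIANT = 10  # dollars


def grid_cost(num_variants: int, cost_per_variant: float = COST_PER_VARIANT) -> float:
    """Total spend needed to give every variant the same fixed budget."""
    return num_variants * cost_per_variant


big_test = grid_cost(90)         # ninety muddled variants
small_test = grid_cost(9)        # the 3x3 grid
savings = big_test - small_test  # budget left over for scaling winners

print(f"90 variants: ${big_test}, 9 variants: ${small_test}, saved: ${savings}")
# 90 variants: $900, 9 variants: $90, saved: $810
```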

The 3x3 Breakdown: 3 Angles x 3 Variations = One Clear Winner

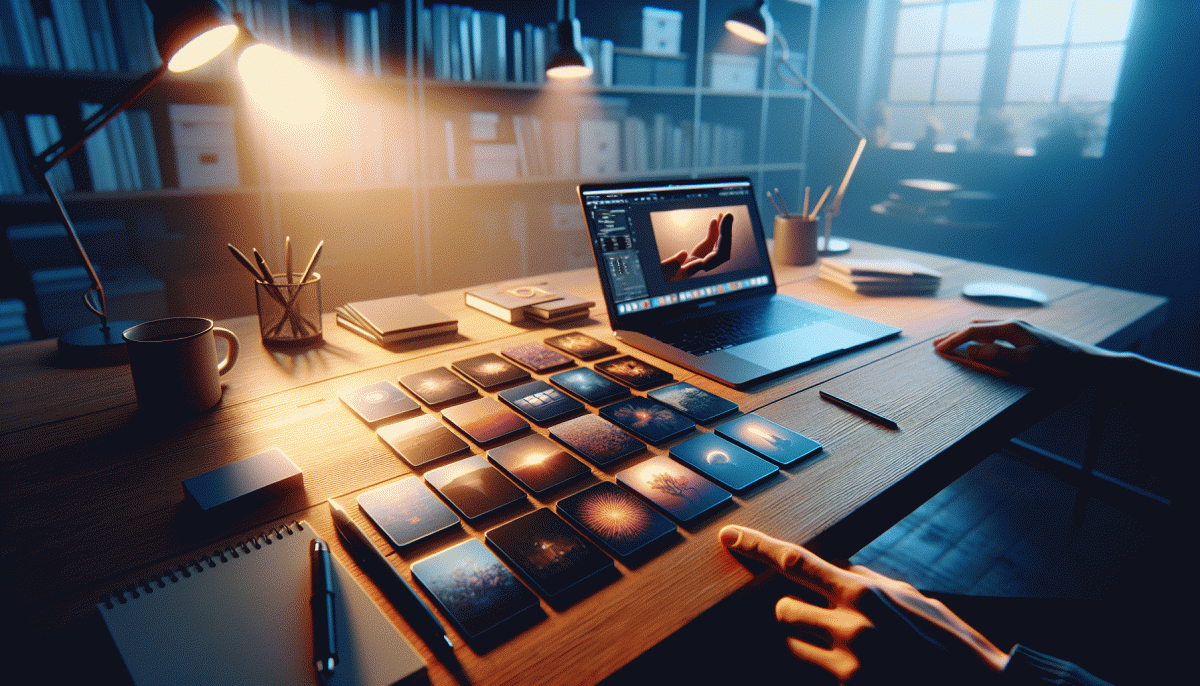

Think of the 3x3 as a tiny lab where you combine three strategic "angles" with three lightweight creative flips each. An angle is your story — problem, benefit, or social proof — and each variation tweaks one variable: headline tone, visual framing, or CTA wording. That gives you nine focused experiments that are cheap to run and easy to compare.

Start by writing crisp hypotheses: "If we lead with empathy (angle) and soften the CTA (variation A), conversions will rise." Use a clean naming convention that includes angle + variation (e.g., Benefit_V1). Split budget evenly at first, run to a minimum of conversions per cell (often 20–50) rather than days, and avoid major simultaneous audience changes so you measure creative, not traffic noise.
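As a rough illustration of the naming convention and the even split, the grid can be generated in a few lines. This is a sketch under assumptions: the angle labels come from the text above, while `V1` to `V3`, `build_grid`, and the $90 daily budget are placeholders.

```python
from itertools import product

ANGLES = ["Problem", "Benefit", "SocialProof"]  # the three angles named above
VARIATIONS = ["V1", "V2", "V3"]                 # one changed variable per variation


def build_grid(total_daily_budget: float) -> list:
    """Return nine cells named angle_variation, each with an equal budget share."""
    per_cell = round(total_daily_budget / (len(ANGLES) * len(VARIATIONS)), 2)
    return [
        {"name": f"{angle}_{variation}", "daily_budget": per_cell}
        for angle, variation in product(ANGLES, VARIATIONS)
    ]


grid = build_grid(90.0)  # hypothetical $90/day test budget
for cell in grid:
    print(cell["name"], cell["daily_budget"])
```

Names like `Benefit_V1` make it possible to group results by angle or by variation later without a lookup table.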

Decide winners with a clear metric (CPA, conversion rate, or ROAS) and a kill rule: if a cell trails the top performer by 20% or more after the minimum sample, pause it. Promote top-scoring creatives into scaled ad sets, then iterate: keep the winning angle, introduce three new variations, and repeat the 3x3 cycle. This keeps the learning compounding and fast.
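The kill rule can be sketched as a small function. This version assumes CPA is the chosen metric (lower is better), uses the low end of the 20–50 conversion minimum, and reads "trails by 20%" as a CPA more than 20% above the best cell; the cell names and numbers are made up for illustration.

```python
MIN_CONVERSIONS = 20  # low end of the 20-50 range; an assumption, tune to taste
KILL_GAP = 0.20       # pause cells whose CPA is more than 20% above the best cell


def cells_to_pause(results: dict) -> list:
    """results maps cell name -> {"spend": float, "conversions": int}."""
    # Only judge cells that have reached the minimum sample.
    mature = {n: r for n, r in results.items() if r["conversions"] >= MIN_CONVERSIONS}
    if not mature:
        return []
    cpa = {n: r["spend"] / r["conversions"] for n, r in mature.items()}
    best = min(cpa.values())
    return sorted(n for n, c in cpa.items() if c > best * (1 + KILL_GAP))


results = {
    "Benefit_V1": {"spend": 200.0, "conversions": 40},  # CPA 5.00, current leader
    "Benefit_V2": {"spend": 200.0, "conversions": 35},  # CPA ~5.71, inside the 20% gap
    "Problem_V1": {"spend": 200.0, "conversions": 25},  # CPA 8.00, trails by more than 20%
    "Problem_V2": {"spend": 90.0, "conversions": 10},   # below minimum sample, not judged yet
}
print(cells_to_pause(results))  # ['Problem_V1']
```

Note that a cell which never reaches the minimum sample is never paused by this rule, so it needs a separate spend cap.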

To shave costs, reuse assets (swap captions or overlays instead of re-shooting), prioritize the cheapest-to-produce variation, and prune losers early. Within a few cycles you won’t just have a winner; you’ll have a proven creative playbook that slashes waste and multiplies scalable wins.

Set It Up in 30 Minutes: Your No-Fluff Launch Checklist

Ready to launch in half an hour? Cut the fluff: line up three headlines and three visuals, pick your audience buckets, and give every cell the same budget, and you've got a 3x3 matrix that sifts winners fast. This checklist gives you the nitty-gritty pre-flight so you're testing, not guessing. No fancy dashboards required, just discipline and a clear KPI.

Start with naming and tracking: use a consistent asset name like YYYYMMDD_platform_variation, append UTM parameters, and verify your pixel and conversion events fire across devices. Preload all nine creative combos, create audience buckets (broad, lookalike, retarget), and cap each cell to the same daily spend so tests are fair. Prepare two backup messages per creative and schedule creative refreshes every 7–10 days.
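One possible way to script the naming and UTM step with Python's standard library is shown below. The `utm_*` keys are the conventional campaign parameters; the values, the `example.com` landing page, and the helper names are placeholders, not part of any platform's API.

```python
from datetime import date
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def asset_name(day: date, platform: str, variation: str) -> str:
    """Build a YYYYMMDD_platform_variation asset name."""
    return f"{day:%Y%m%d}_{platform}_{variation}"


def tagged_url(base_url: str, name: str, platform: str, campaign: str) -> str:
    """Append UTM parameters while keeping any query string already on the URL."""
    parts = urlsplit(base_url)
    query = parse_qsl(parts.query)
    query += [
        ("utm_source", platform),
        ("utm_medium", "paid_social"),  # assumed medium label
        ("utm_campaign", campaign),
        ("utm_content", name),          # ties each click back to one grid cell
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


name = asset_name(date(2025, 12, 19), "meta", "Benefit_V1")
url = tagged_url("https://example.com/landing", name, "meta", "3x3_test")
print(name)  # 20251219_meta_Benefit_V1
print(url)
```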

- 🆓 Creative: 3 headlines + 3 visuals ready in correct specs; optimize file sizes so ads render instantly.

- ⚙️ Tracking: Pixel, server events, and UTMs verified; set automated reports for hour 24, day 3, and day 7.

- 🚀 Launch: Even split across nine cells for the first 72 hours; flag bottom performers and shift budget toward the top one or two cells (roughly the top 10–20%).

Finish with guardrails: pick one primary KPI (CPA or ROAS), set a stop-loss to kill runaway spend, and define a scale path (e.g., raise winners' budgets in 20–30% increments). Stick to the plan, iterate on the handful of clear signals, and you'll shave wasted spend while increasing wins: fast, clean, and a little smug.
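The guardrails can be written down as two tiny rules. This is only a sketch: the 25% step sits inside the 20–30% range above, while the budget ceiling and the "2x target CPA" stop-loss threshold are assumptions you would set for your own account.

```python
from typing import Optional


def next_budget(current: float, step: float = 0.25, ceiling: Optional[float] = None) -> float:
    """Raise a winner's budget by one increment, never past the optional ceiling."""
    proposed = current * (1 + step)
    return min(proposed, ceiling) if ceiling is not None else proposed


def hit_stop_loss(spend: float, conversions: int, target_cpa: float, tolerance: float = 2.0) -> bool:
    """True once a cell's effective CPA reaches tolerance x the target CPA."""
    limit = tolerance * target_cpa
    if conversions == 0:
        return spend >= limit  # spent the limit without a single conversion
    return spend / conversions >= limit


print(next_budget(100.0))                     # 125.0
print(next_budget(100.0, ceiling=110.0))      # 110.0
print(hit_stop_loss(60.0, 0, target_cpa=25))  # True: $60 spent, nothing to show
```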

9 Ads, 1 Budget: Smart Spend That Still Finds Signals

Running nine ads on one lean budget is not reckless, it is disciplined experimentation. Treat each creative like a lab subject: give every ad a tiny, equal allocation for the first wave, pick one primary metric (CPA, CTR, or ROAS), and run that micro-test long enough to see a signal rather than noise. Small spends force clarity — you will quickly learn which creatives have promise without burning cash on vanity winners.

Group your creatives into clear buckets and name them so you can read results at a glance. Start with three concept families and three variants each, then let performance decide the survivors. If you need a quick way to amplify early winners for social proof, check out a cheap Facebook boosting service to gather initial engagement without blowing the test budget.

Set firm kill rules: a variant that misses the baseline metric by X% after Y impressions gets cut. Reallocate the freed micro-budget proportionally to the top performers and run a second round. Think in rounds of 3–5 days for learning windows, not forever. This fast iterate-and-prune loop turns nine thin hypotheses into one confident winner.
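A reallocation step along these lines might look like the sketch below. "Proportionally" is interpreted here as proportional to each survivor's conversions, which is an assumption; you could just as well weight by ROAS or inverse CPA.

```python
def reallocate(budgets: dict, killed: set, weights: dict) -> dict:
    """Move the budget of killed cells to survivors in proportion to their weights."""
    freed = sum(budgets[name] for name in killed)
    survivors = {name: b for name, b in budgets.items() if name not in killed}
    total_weight = sum(weights[name] for name in survivors)
    if total_weight == 0:
        # No signal yet: fall back to an even split of the freed budget.
        share = freed / len(survivors) if survivors else 0.0
        return {name: b + share for name, b in survivors.items()}
    return {name: b + freed * weights[name] / total_weight for name, b in survivors.items()}


budgets = {"Benefit_V1": 10.0, "Benefit_V2": 10.0, "Problem_V1": 10.0}
conversions = {"Benefit_V1": 30, "Benefit_V2": 10, "Problem_V1": 2}  # illustrative numbers
round_two = reallocate(budgets, killed={"Problem_V1"}, weights=conversions)
print(round_two)  # {'Benefit_V1': 17.5, 'Benefit_V2': 12.5}
```

Total spend stays the same between rounds; only its distribution changes.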

Don’t overcomplicate optimization. Keep headlines, visuals, and CTAs modular so you can mix and match winners. Track frequency and audience overlap to avoid cannibalization, and prefer simple analytics: a single dashboard column for the chosen KPI will keep decisions sharp and fast.

Ready-to-run checklist: 1) Split 9 ads into 3x3 groups, 2) allocate equal micro-budgets and a single KPI, 3) kill losers on a fixed rule and double down on winners. Small bets + strict rules = fewer wasted dollars and more scalable winners.

Avoid the Money Pits: Common Testing Traps (and Easy Fixes)

Testing is supposed to save money, not bury it. Yet teams routinely pour budget into experiments that never learn: overfragmented variants, premature calls because of a lucky day, or letting creative fatigue eat into conversions. The antidote is simple discipline: a tight framework that forces you to test smarter, not louder, so your 3x3 setup delivers clear winners without the budget hemorrhage.

- 🐢 Sample Size: Running tiny cells feels fast but yields noise; increase traffic per cell or combine cells until results are reliable.

- 💥 False Positives: Stopping on first uplift is a trap; require consistency across days and cohorts before declaring victory.

- 🤖 Creative Bloat: More ideas do not equal more wins; too many creatives dilute learnings and spike costs.

Fixes you can implement this afternoon: pick one clear hypothesis per 3x3 grid, limit creatives to three variants, and predefine a minimum detectable effect and test duration. Allocate traffic so each cell gets enough impressions, and automate pause rules for losers. If a variant looks flaky, rerun with a tightened audience rather than throwing more spend at it.
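To put a number on "enough impressions", one common textbook approximation is the two-proportion sample size formula. The sketch below uses it as an assumption, not as this article's prescribed method; the 2% baseline conversion rate, 5% significance level, and 80% power are illustrative defaults, and it does not correct for comparing nine cells at once.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_cell(baseline_rate: float, relative_mde: float,
                      alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per cell to detect a relative lift in conversion rate."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)      # rate you hope the variant reaches
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


n_small_lift = visitors_per_cell(0.02, 0.20)  # detect a 20% relative lift on a 2% baseline
n_big_lift = visitors_per_cell(0.02, 0.50)    # detect a 50% relative lift
print(n_small_lift, n_big_lift)
```

The smaller the effect you want to detect, the more traffic each cell needs, which is why predefining the minimum detectable effect matters before you set the test duration.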

Treat tests like targeted investments: limit exposure, insist on repeatable signals, and scale only the clean winners. Do that and you will cut waste, accelerate learning, and turn experimental spend into predictable growth.

Aleksandr Dolgopolov, 19 December 2025