This 3x3 Creative Testing Framework Cuts Waste and Prints Winners—Steal It

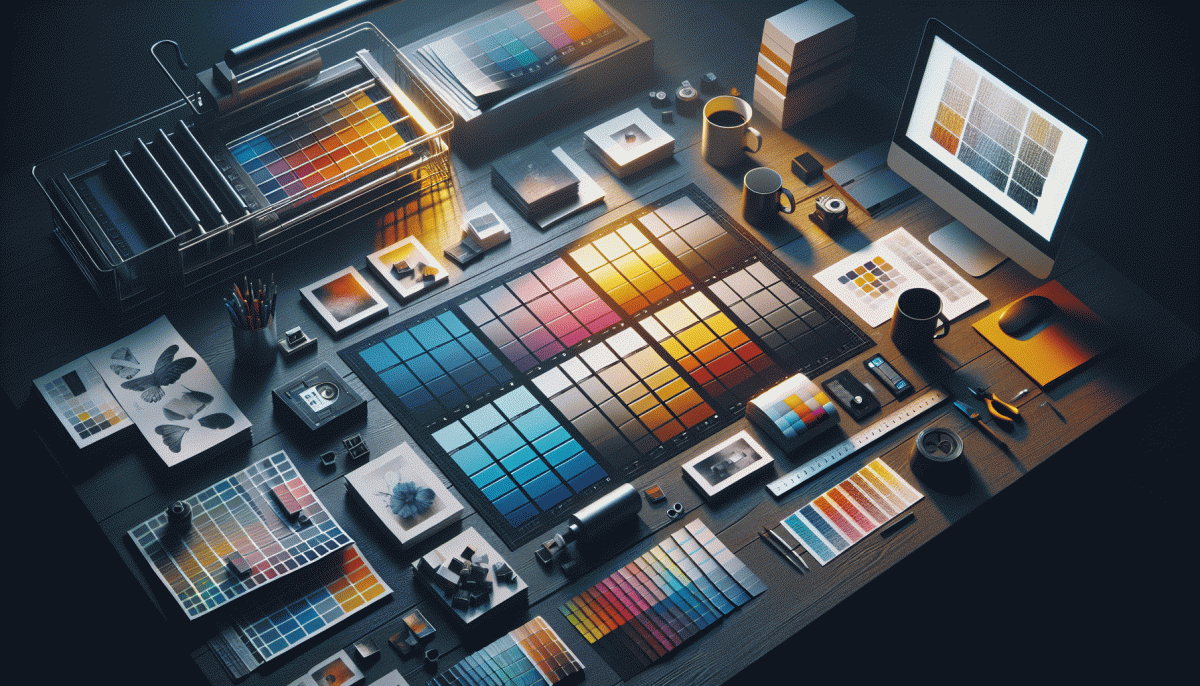

Meet the Grid: How the 3 Hooks x 3 Visuals Formula Works

Think of the grid as a scientific playground: three distinct hooks (the promise, the pain, the curiosity bait) down the left and three visual strategies (product close-up, lifestyle scene, motion-driven graphic) across the top. Combine them and you get nine real-world creatives that reveal which pairing of narrative and look actually moves people. It's elegant because it forces variety without chaos: every creative is testable, comparable, and repeatable.

Pick hooks that are meaningfully different, not synonyms. Choose visuals that change composition, framing, or motion — not just color tints. Launch all nine combos simultaneously, split budget evenly, and randomize audience slices. This removes timing bias and accelerates learning. Keep placements consistent, or deliberately test one placement at a time if placement is your hypothesis.
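To make the grid concrete, here is a minimal sketch of the nine cells with an even budget split. The labels, the `build_grid` helper, and the 900 budget figure are illustrative assumptions, not part of any ad platform's API.

```python
from itertools import product

# Hypothetical labels based on the hook and visual types named above.
HOOKS = ["promise", "pain", "curiosity"]
VISUALS = ["product_closeup", "lifestyle", "motion_graphic"]


def build_grid(total_budget: float) -> list[dict]:
    """Return one cell per hook/visual pair, each with an equal budget share."""
    cells = list(product(HOOKS, VISUALS))
    per_cell = round(total_budget / len(cells), 2)
    return [
        {"hook": hook, "visual": visual, "budget": per_cell}
        for hook, visual in cells
    ]


grid = build_grid(900.0)  # 900 is an example figure only
for cell in grid:
    print(cell)
```

Every hook meets every visual exactly once, which is what keeps the nine results comparable.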

Run the grid long enough to collect stable early signals: CTR, view-through rate, and micro-conversions (add-to-cart, sign-ups) are fast indicators; downstream conversions confirm winners. Set pragmatic stop-loss rules: if a cell is underperforming versus the median by X% after Y impressions, pause it and reallocate. Don't chase noise — prioritize consistent patterns across multiple metrics.
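The stop-loss rule can be sketched in a few lines. The text leaves X and Y open, so the 30% shortfall and 1,000-impression floor below are placeholder assumptions, as are the cell names and the choice of CTR as the metric.

```python
from statistics import median


def cells_to_pause(cells, max_shortfall=0.30, min_impressions=1000):
    """Return the names of cells whose CTR trails the median by more than max_shortfall.

    cells maps a cell name to (impressions, clicks). Cells below
    min_impressions are skipped: they have not earned a verdict yet.
    """
    eligible = {
        name: clicks / impressions
        for name, (impressions, clicks) in cells.items()
        if impressions >= min_impressions
    }
    if not eligible:
        return []
    floor = median(eligible.values()) * (1 - max_shortfall)
    return sorted(name for name, ctr in eligible.items() if ctr < floor)


example = {
    "pain_lifestyle": (2000, 40),
    "promise_closeup": (2000, 30),
    "curiosity_motion": (2000, 12),
    "pain_motion": (400, 1),  # too few impressions to judge
}
print(cells_to_pause(example))
```

Note that the median here is computed only over cells that have reached the impression floor, which is one reasonable reading of the rule rather than the only one.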

When a winner emerges, scale it horizontally: increase spend, expand targeting, and create micro-variants (copy tweaks, crop changes, sound swaps). Archive all nine results with clear labels so future tests start from real data, not gut. Repeat the 3x3 with a fresh hook or visual set every cycle and you'll steadily shrink waste and print repeatable winners.

Setup in 30 Minutes: The Inputs, Budget, and Guardrails

Start by gathering three simple inputs: the creative assets you already have (video, image, headline), the audience segments you want to stress-test, and one clear metric that declares victory. Keep each input lean: three creatives, three audiences, one KPI. That's enough signal to know what's working without drowning in noise.

Split your budget like a surgeon, not a gambler. Reserve about 60% for the full 3x3 matrix (so every creative meets every audience), 25% for a small follow-up ramp on early winners, and 15% as a holdback for validation. With modest numbers per cell you get directional clarity fast—this isn't about statistical nirvana, it's about repeatable winners.
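As a quick worked example of the 60/25/15 split, assuming a hypothetical total of 1,500 and nine cells (the `split_budget` helper is illustrative only):

```python
def split_budget(total: float, cells: int = 9) -> dict:
    """Apply the 60/25/15 split and derive the per-cell test budget."""
    matrix = total * 0.60
    return {
        "matrix": round(matrix, 2),
        "per_cell": round(matrix / cells, 2),
        "ramp": round(total * 0.25, 2),
        "holdback": round(total * 0.15, 2),
    }


plan = split_budget(1500)  # 1500 is an example figure only
print(plan)
```

With these numbers each cell gets 100 to spend, which makes it easy to see whether the per-cell budget can realistically reach your minimum impressions.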

Put guardrails in place before you hit launch: minimum impressions per cell, a runtime floor (usually 48–72 hours), and a stop-loss CPA. If a cell clears the runtime without the minimum impressions, extend; if it blows past the CPA threshold, kill it. These simple rules save days and ad dollars while keeping the experiment honest.
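Those guardrails translate into a small decision function. This is a sketch under stated assumptions: the thresholds (48 hours, 2,000 impressions, a 40 CPA cap) are placeholders, and treating a cell with zero conversions as over the cap once its spend exceeds the cap is my assumption, not something the rules above spell out.

```python
def guardrail_action(hours_live, impressions, spend, conversions,
                     min_hours=48, min_impressions=2000, max_cpa=40.0):
    """Return 'kill', 'keep running', 'extend', or 'evaluate' for one cell."""
    # Stop-loss first: a cell past the CPA cap is cut regardless of runtime.
    # Assumption: with zero conversions, spend above the cap counts as a breach.
    if conversions > 0:
        over_cap = spend / conversions > max_cpa
    else:
        over_cap = spend > max_cpa
    if over_cap:
        return "kill"
    if hours_live < min_hours:
        return "keep running"  # runtime floor not yet reached
    if impressions < min_impressions:
        return "extend"        # cleared runtime without enough impressions
    return "evaluate"          # enough time and volume to read the cell


print(guardrail_action(hours_live=72, impressions=1200, spend=30.0, conversions=1))
```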

Make the tech and process frictionless. Use a consistent naming convention, a shared spreadsheet or dashboard with live metrics, and a template brief for creative swaps so anyone can plug in a new variant in five minutes. Automate tagging so you can slice results by creative element, audience, and placement without hunting through messy reports.
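One way to keep naming consistent and sliceable is to build and parse names with code rather than by hand. The field order and separators below are a hypothetical convention, not a platform requirement.

```python
FIELDS = ["test_id", "hook", "visual", "audience", "placement"]


def ad_name(test_id, hook, visual, audience, placement):
    """Join the five fields with underscores; hyphens replace spaces and underscores inside a field."""
    parts = [test_id, hook, visual, audience, placement]
    return "_".join(p.lower().replace(" ", "-").replace("_", "-") for p in parts)


def parse_ad_name(name):
    """Split a name built by ad_name back into labeled fields."""
    values = name.split("_")
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    return dict(zip(FIELDS, values))


name = ad_name("T01", "Pain", "Lifestyle Scene", "lookalike_1pct", "Feed")
print(name)
print(parse_ad_name(name))
```

Because every name parses back into the same five fields, a spreadsheet export can be grouped by hook, visual, audience, or placement without manual cleanup.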

Launch with a 30-minute timer: upload, name, budget, and start. Schedule two decision checkpoints—one quick check at 72 hours, one validation window at scale. Small, disciplined experiments turn creative guesswork into a repeatable machine that prints winners, faster.

Zero-Fluff Metrics: What to Track, What to Ignore, When to Decide

If creative testing is a science, then metrics are the microscope. Use only indicators that move the business needle: conversions, cost per acquisition, and the micro-conversions that predict them. Everything else is background noise. Treat each of the nine test cells as a tiny funnel and pick one primary metric you will not argue about.

Primary: conversions or revenue per visit. Efficiency: CPA or ROAS. Signal: CTR or watch time when they reliably predict conversion. Track those three only. Keep reporting lean so decisions are fast and repeatable. Add a supporting metric only if it directly explains a win or a loss.

Ignore vanity signals like raw impressions, follower counts, and aggregated engagement that do not correlate with purchases or leads. Likes and saves are decoration, not proof. Also ignore small, noisy swings under low volume; a 10 percent CTR bump on 200 impressions is a mirage. If a metric does not change an action you will take, stop monitoring it.
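A rough calculation shows why a bump like that is a mirage. The sketch below uses the standard error of a proportion and assumes a 2% baseline CTR, which is an illustrative figure and not a number from this framework.

```python
import math


def ctr_standard_error(ctr: float, impressions: int) -> float:
    """Standard error of an observed click-through rate (binomial approximation)."""
    return math.sqrt(ctr * (1 - ctr) / impressions)


baseline_ctr = 0.02          # assumed baseline, for illustration only
bump = baseline_ctr * 0.10   # a 10 percent relative bump
noise = ctr_standard_error(baseline_ctr, 200)

print(f"bump={bump:.4f}, standard error={noise:.4f}")
```

Under that assumption the bump is about 0.002 while one standard error is about 0.0099, so the "lift" is several times smaller than ordinary sampling noise.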

Decide on a cadence and a minimum sample before you act: set a stop rule (for example, 2,000 impressions or 20 conversions per creative) and a lift threshold (for example, 15 to 20 percent relative improvement or a meaningful CPA drop). Pause consistent losers, scale winners incrementally, and rerun fresh variants from the 3x3 matrix. Discipline in metrics equals faster winners and less wasted budget.
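The sample floor and lift threshold combine into a simple rule. This sketch uses the example figures above (2,000 impressions or 20 conversions, 15 percent lift). Comparing conversion rate against a baseline, and pausing at a symmetric negative lift, are assumptions made for illustration.

```python
def decide(impressions, conversions, baseline_cvr,
           min_impressions=2000, min_conversions=20, min_lift=0.15):
    """Return 'wait', 'scale', 'pause', or 'hold' for one creative."""
    # Act only once either sample floor has been reached.
    if impressions < min_impressions and conversions < min_conversions:
        return "wait"
    cvr = conversions / impressions
    lift = (cvr - baseline_cvr) / baseline_cvr  # relative improvement
    if lift >= min_lift:
        return "scale"
    if lift <= -min_lift:  # assumed symmetric threshold for losers
        return "pause"
    return "hold"


print(decide(impressions=3000, conversions=45, baseline_cvr=0.012))
```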

9 Quick Swaps: Hooks, Visuals, and CTAs That Stack the Odds

Think of nine tiny experiments instead of one huge bet: swap one hook, one visual, and one CTA across three variants and you get fast signals without blowing the budget. The trick is to make each swap deliberate—change only one psychological lever at a time so winners reveal why they work, not just that they did.

Swap hooks by moving from curiosity to clarity: test a mystery opener, a bold benefit statement, and a skeptical objection that you immediately answer. Try shifting from "Did you know..." to "Stop losing X" to "Most people think Y, here is what actually works." Each version targets a different motive—novelty, pain avoidance, or credibility—so you can see which wins for your audience.

For visuals, trade highly staged hero shots for candid product-in-use, then try a motion-first short loop. Also experiment with color temperature: warm tones feel cozy and trustworthy, cool tones feel modern and aspirational. Small visual pivots often magnify hook effects, so the grid will expose combinations that sing together.

CTAs are micro-psychology: test action verbs versus micro-commits and urgency. Swap "Buy now" for "Try risk-free" and "See how it works" to nudge different conversion stages. If you want a quick reference for platform-oriented creative ideas, check a TT boosting site and adapt the language to your funnel.

Run each cell for a short window, watch relative lift rather than absolute volume, and promote only when multiple cells corroborate. That disciplined chopping and testing keeps spend tight and winners repeatable, fast.
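One way to check whether multiple cells corroborate is to average the metric across every cell that shares an element. The sketch below assumes cells keyed by hook and visual with CTR as the metric. The data is invented for illustration.

```python
from collections import defaultdict
from statistics import mean


def marginal_means(results):
    """Average a metric by hook and by visual.

    results maps (hook, visual) to a metric value such as CTR.
    """
    by_hook, by_visual = defaultdict(list), defaultdict(list)
    for (hook, visual), value in results.items():
        by_hook[hook].append(value)
        by_visual[visual].append(value)
    hook_means = {hook: mean(values) for hook, values in by_hook.items()}
    visual_means = {visual: mean(values) for visual, values in by_visual.items()}
    return hook_means, visual_means


# Invented example data: two hooks by two visuals.
sample = {
    ("pain", "lifestyle"): 0.030,
    ("pain", "closeup"): 0.020,
    ("promise", "lifestyle"): 0.018,
    ("promise", "closeup"): 0.012,
}
hooks, visuals = marginal_means(sample)
print(hooks)
print(visuals)
```

If a hook wins only in one cell but its average across visuals is ordinary, that is a hint the result may be noise or a one-off combination rather than a repeatable lever.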

From Test to Scale: Rolling Your Winners into Meta Ads Without Mayhem

Winners from the 3x3 lab are not golden tickets; they are blueprints for repeatable lift. When moving a creative into Meta, do not blast it across every audience. Treat the rollout like science: isolate variables, control spend, and label everything so you know which creative did what. Start small and keep the experimental spirit.

Launch each winner into 3 to 5 tightly defined audiences with separate ad sets per creative. Run for 48 to 72 hours on learning budgets, then read CTR, CVR, and CPA as your triage metrics. Use dayparting and placements to see where the creative sings, and if the signal dries up, pause and iterate instead of pouring more budget into noise.

For hands-free scale support and smart automation, consider external options such as Instagram boosting services that can seed fast reach without killing algorithmic learning. Use third-party seeding to accelerate awareness, but keep final conversion testing and attribution inside your own account for true measurement and creative credit.

When increasing budgets, prefer step bumps of 20 to 30 percent every 48 hours and use campaign budget optimization (CBO) with ad set spend limits for control. Watch frequency and creative-level ROAS, clone winning combos, run scaled holdouts, and document every step in a simple tracker so winners remain winners at scale.
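Step bumps compound, so it helps to see the ramp laid out before committing. This is a minimal sketch assuming a 20 percent step every 48 hours. The starting and target budgets are example figures and `ramp_schedule` is a hypothetical helper.

```python
def ramp_schedule(start_budget, target_budget, step=0.20, hours_per_step=48):
    """Return (hours_elapsed, daily_budget) pairs until the target is reached."""
    if step <= 0:
        raise ValueError("step must be positive")
    schedule = [(0, round(start_budget, 2))]
    budget, hours = start_budget, 0
    while budget < target_budget:
        # Raise by one step, but never overshoot the target.
        budget = min(budget * (1 + step), target_budget)
        hours += hours_per_step
        schedule.append((hours, round(budget, 2)))
    return schedule


schedule = ramp_schedule(100, 200)  # example figures only
for hours, budget in schedule:
    print(f"{hours:>4}h  {budget}")
```

At 20 percent per step, doubling a budget takes four bumps, or about eight days. That is worth knowing before you promise anyone a timeline.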

Aleksandr Dolgopolov, 05 January 2026