The 3x3 Creative Testing Framework That Slashes Costs and Gives You Hours Back

Your A/B Tests Are Lying—Here's How 3x3 Finds Winners Faster

A/B tests lie when you let noise masquerade as signal. Small sample sizes, novelty boosts, and the habit of launching a dozen variants at once turn every winner into a coin flip. Teams stop tests early because the dashboard whispers 'stat sig'—but that whisper is often a mirage that costs time, budget, and confidence.

Enter the 3x3 method: three creative variants tested across three audience lenses. Instead of splitting traffic into dozens of tiny buckets, you run nine focused cells that give you cross-validated winners and faster learning loops. The matrix design forces consistency: a creative that wins across multiple cells is a real performer, not a one-night stand.

Practical setup is simple: pick three big levers (visual, headline, CTA) and three audience types (cold, warm, high-intent). Rotate every 48–72 hours, apply basic pre-test sanity checks, and size each cell so it can reach the minimum sample you set before launch. Only promote a winner that beats control in at least two cells; that rule cuts false positives and prevents premature calls.
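As a rough sketch of the "at least two cells" rule, here is one way to count wins per creative in Python. The field names (`creative`, `audience`, `rate`, `control_rate`) and the sample numbers are invented for illustration, and the comparison is a plain greater-than check, so it assumes every cell has already reached its minimum sample.

```python
from collections import defaultdict


def promotable_creatives(results, min_cells=2):
    """Return creatives that beat control in at least `min_cells` audience cells.

    `results` is a list of dicts with hypothetical keys:
    creative, audience, rate, control_rate.
    """
    wins = defaultdict(set)
    for row in results:
        if row["rate"] > row["control_rate"]:
            wins[row["creative"]].add(row["audience"])
    return sorted(c for c, cells in wins.items() if len(cells) >= min_cells)


# Illustrative numbers only, not real campaign data.
results = [
    {"creative": "C1", "audience": "cold", "rate": 0.031, "control_rate": 0.025},
    {"creative": "C1", "audience": "warm", "rate": 0.040, "control_rate": 0.035},
    {"creative": "C1", "audience": "high_intent", "rate": 0.050, "control_rate": 0.055},
    {"creative": "C2", "audience": "cold", "rate": 0.029, "control_rate": 0.025},
    {"creative": "C2", "audience": "warm", "rate": 0.030, "control_rate": 0.035},
    {"creative": "C2", "audience": "high_intent", "rate": 0.051, "control_rate": 0.055},
]

print(promotable_creatives(results))
```

Here C1 wins in two audiences and C2 in only one, so only C1 clears the bar.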

To operationalize it, automate rotation, predefine minimum samples and runtime, and use a compact dashboard that flags cross-cell consistency over single-day spikes. The payoff is immediate: fewer wasted spins, clearer creative direction, faster decisions, and actual hours back for your team to make better stuff—not babysit flaky tests.

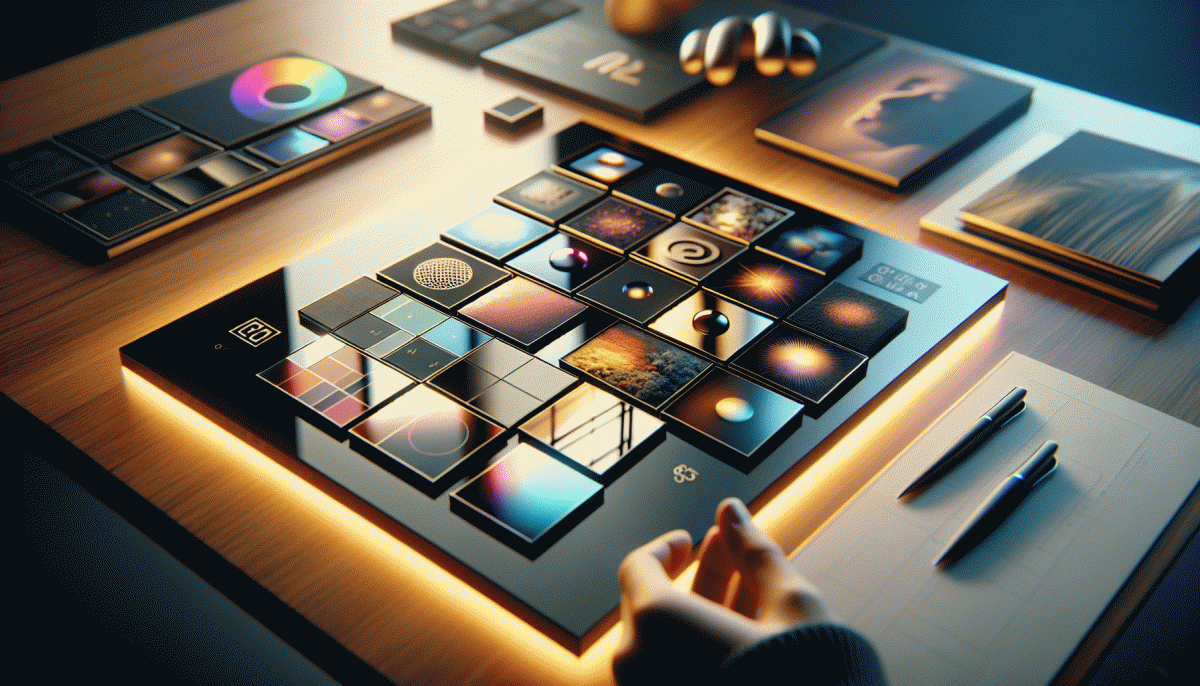

The Grid Explained: 3 Messages x 3 Visuals = 9 Rapid Insights

Think of the grid as a creative pressure test that finds winners fast. Pair three distinct messages with three distinct visual styles to get nine clear data points in the time that one random spray test would take. The goal is speed and clarity: identify what idea lands and which design carries it without wasting creative hours or ad spend.

Start by choosing three messages that each sell a different angle — benefit, proof, emotion. Match those to three visual treatments that vary in composition, color, or format. Run all nine combinations simultaneously under the same targeting and budget, then collect engagement, click, and conversion signals after a short burst.

- 🚀 Message: Test angle, tone, and offer variation quickly

- 🔥 Visual: Swap layout, color palette, or hero asset

- 🆓 Metric: Choose one primary KPI and two supporting metrics
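If it helps to see the grid as data, the nine combinations can be enumerated in a few lines. This is only a sketch: the three message angles come from the text above, while the visual labels are placeholders.

```python
from itertools import product

messages = ["benefit", "proof", "emotion"]       # angles named in the article
visuals = ["visual_a", "visual_b", "visual_c"]   # placeholder treatment names

# Every message paired with every visual: 3 x 3 = 9 cells.
grid = [{"message": m, "visual": v} for m, v in product(messages, visuals)]

for cell in grid:
    print(cell["message"], "x", cell["visual"])
```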

When results arrive, prune aggressively: keep the top combos, iterate on the partial winners, and retire the rest. Repeat the grid with refined messages or visuals to compound learning. The result is fewer wasted concepts, faster creative cycles, and hours reclaimed for strategy and big ideas.

Set Up in 30 Minutes: Budgets, Variations, and Guardrails

Start by treating the first 30 minutes like a launch checklist rather than an art project. Pick a total daily budget you are comfortable losing while learning, then divide it evenly across nine cells so each combo gets an honest chance. For example, a $90 daily budget becomes $10 per cell; set a minimum test window of 3 to 5 days or a spend floor of roughly $30 per cell before making any cuts. That simple math prevents premature hero worship of flukes.
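The budget math above is easy to script. A minimal sketch, assuming an even split across cells and a fixed spend floor per cell (the function name and defaults are illustrative):

```python
import math


def plan_budget(daily_budget, cells=9, spend_floor=30.0):
    """Split a daily budget evenly and estimate days needed to hit the spend floor."""
    per_cell = daily_budget / cells
    days_to_floor = math.ceil(spend_floor / per_cell)
    return per_cell, days_to_floor


per_cell, days = plan_budget(90)
print(f"${per_cell:.2f} per cell per day, about {days} days to reach the floor")
```

With the article's $90 example, each cell gets $10 a day and reaches the $30 floor on day three, which lines up with the low end of the 3 to 5 day window.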

Design your 3x3 grid next: 3 creatives crossed with 3 audiences. Name every ad with a short slug that shows creative number, audience, and test batch, like C2_A1_Batch5. Use templated creative variations that swap a single element at a time (headline, image, or CTA) so you actually learn what moves metrics.
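A naming convention only pays off if it is applied consistently, so it is worth generating slugs instead of typing them. The sketch below follows the C2_A1_Batch5 pattern from the text; the helper names `ad_slug` and `parse_slug` are hypothetical.

```python
import re

SLUG_RE = re.compile(r"^C(\d+)_A(\d+)_Batch(\d+)$")


def ad_slug(creative, audience, batch):
    """Build a slug such as C2_A1_Batch5."""
    return f"C{creative}_A{audience}_Batch{batch}"


def parse_slug(slug):
    """Recover (creative, audience, batch) from a slug, or raise ValueError."""
    match = SLUG_RE.match(slug)
    if match is None:
        raise ValueError(f"not a valid ad slug: {slug!r}")
    return tuple(int(group) for group in match.groups())


# All nine slugs for batch 5.
slugs = [ad_slug(c, a, 5) for c in range(1, 4) for a in range(1, 4)]
print(slugs)
```

Being able to parse the slug back into its parts makes it simple to group reporting rows by creative or by audience later.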

Guardrails are the safety net that lets experiments run without chaos. Set hard caps on frequency and CPA, and use stop rules: pause any cell that underperforms the median by a clear margin after the spend floor is hit. Enforce creative freshness by rotating out creatives older than two weeks, and lock bids or budgets so an early runaway winner does not consume the test and hide better long term performers.
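One possible reading of the stop rule, sketched in Python: once a cell has passed the spend floor, pause it if its CTR sits more than a set margin below the median of the other eligible cells. The 25% margin, the choice of CTR as the metric, and the data layout are all assumptions, since the text only asks for "a clear margin".

```python
from statistics import median


def cells_to_pause(cells, spend_floor=30.0, margin=0.25):
    """Return names of cells past the spend floor whose CTR trails the median.

    `cells` maps a cell name to {"spend": float, "ctr": float}.
    Cells below the spend floor are never paused by this rule.
    """
    eligible = {name: c for name, c in cells.items() if c["spend"] >= spend_floor}
    if not eligible:
        return []
    cutoff = median(c["ctr"] for c in eligible.values()) * (1 - margin)
    return sorted(name for name, c in eligible.items() if c["ctr"] < cutoff)


# Illustrative numbers only.
cells = {
    "C1_A1": {"spend": 32.0, "ctr": 0.020},
    "C2_A1": {"spend": 31.0, "ctr": 0.012},
    "C3_A1": {"spend": 35.0, "ctr": 0.022},
    "C1_A2": {"spend": 12.0, "ctr": 0.005},  # still under the spend floor
}

print(cells_to_pause(cells))
```

Note that C1_A2 has the weakest CTR but is left alone because it has not yet spent enough to be judged.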

Finish with automation and a short dashboard. Duplicate the winning cell into a refinement batch, scale budgets in 20 to 30 percent increments, and archive losers. Track three KPIs first — CTR, cost per action, and conversion rate — then iterate. With a checklist and naming conventions, the whole setup is a half hour sprint that buys you hours back in decision making.

Metrics That Matter: Cost per Learn, Win Rate, and When to Kill

Testing is not about pretty ads, it is about readable signals. The three metrics that save time and cash are cost per learn, win rate, and explicit kill rules. Treat them like a triage team: one counts currency spent to learn, one tracks how often ideas actually win, and one decides when to stop bleeding effort on duds.

Cost per learn is simple math with big consequences. Take total test spend and divide by the number of reliable learnings, defined as statistically or practically significant shifts you can act on. Set a cap: if the cost per learn approaches the expected value of the insight or a meaningful fraction of customer LTV, you are paying to be unsure, not smarter.
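In code, cost per learn is a single division plus a guard for tests that produced nothing actionable. A small sketch, where the spend, learning count, and cap are illustrative values:

```python
def cost_per_learn(total_spend, reliable_learnings):
    """Total test spend divided by the number of learnings you can act on."""
    if reliable_learnings == 0:
        return float("inf")  # money spent, nothing learned
    return total_spend / reliable_learnings


cpl = cost_per_learn(total_spend=810.0, reliable_learnings=3)
cap = 300.0  # hypothetical cap; set yours from expected insight value or LTV
print(cpl, "over cap" if cpl > cap else "within cap")
```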

Win rate is the percent of variants that beat the control and actually move the needle. A 5 percent win rate is probably noise hunting; 20 to 30 percent is healthy and signals strong creative hypotheses. Always read uplift size too: a smaller win with high confidence can be more valuable than a fluke big lift that never repeats.
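Win rate can be tracked the same way. This sketch assumes you record one True/False outcome per variant, with True meaning the variant beat control by a margin you consider meaningful:

```python
def win_rate(outcomes):
    """Share of variants that beat control; `outcomes` is a list of booleans."""
    if not outcomes:
        return 0.0
    return sum(outcomes) / len(outcomes)


# Illustrative batch: 2 winners out of 9 cells.
batch = [True, False, False, True, False, False, False, False, False]
print(f"{win_rate(batch):.0%}")
```

Two wins in nine cells works out to roughly 22 percent, inside the 20 to 30 percent range described above.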

Make kill calls with rules that combine both metrics and business context. Practical triggers look like this:

- 🆓 Low Signal: After planned sample size, if confidence is under threshold and uplift is negligible, kill the variant and redeploy budget.

- 🐢 Slow Win Rate: If a cell spends twice the expected cost per learn without a win, reassign creative resources and pivot hypotheses.

- 💥 Cost Burn: Stop tests where projected cost per learn exceeds 10 percent of target CPA or the insight would not change a million dollar decision.
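The first two triggers translate fairly directly into a rule function. The sketch below is one interpretation, not a standard: the `CellStatus` fields, the 0.90 confidence threshold, and the 2% "negligible uplift" cutoff are assumptions. The Cost Burn trigger is left out because it depends on business judgment that does not reduce to a simple check.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CellStatus:
    samples: int
    planned_samples: int
    confidence: float               # e.g. 0.95 for 95% confidence
    uplift: float                   # relative lift vs control, e.g. 0.03
    spend: float
    expected_cost_per_learn: float
    wins: int


def kill_reason(cell, min_confidence=0.90, min_uplift=0.02) -> Optional[str]:
    """Return the name of the first trigger that fires, or None to keep running."""
    reached_sample = cell.samples >= cell.planned_samples
    if reached_sample and cell.confidence < min_confidence and abs(cell.uplift) < min_uplift:
        return "low_signal"
    if cell.wins == 0 and cell.spend >= 2 * cell.expected_cost_per_learn:
        return "slow_win_rate"
    return None


weak = CellStatus(1200, 1000, 0.62, 0.004, 40.0, 90.0, 0)
slow = CellStatus(600, 1000, 0.70, 0.03, 200.0, 90.0, 0)
healthy = CellStatus(1000, 1000, 0.96, 0.05, 60.0, 90.0, 1)

for cell in (weak, slow, healthy):
    print(kill_reason(cell))
```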

Operationalize these metrics: set CPLearn budgets per 3x3 cell, track rolling win rate on a dashboard, and automate kill rules in your test runner. The result is less waste, faster learning loops, and literal hours back to do the creative work that actually scales.

Scale Like a Pro: Promote Winners, Fix Losers, Repeat

Think of your 3x3 grid as a mini factory: each creative is a product line, and your job is less creative director and more floor manager. Spot a winner, pour resources on it fast; label a loser, decide whether to tweak, repackage, or retire. The faster you act, the more hours you get back and the less budget leaks into experiments that have already failed.

Set simple, defensible thresholds: if a creative beats the baseline on CTR or CPA by 20% or more over 48–72 hours, treat it as a winner; if it trails the baseline by 15% or more after the same window, earmark it for a fix. On larger accounts, check confidence intervals before acting. At micro-scale there is rarely enough data for significance, so treat pacing and CPM shifts as your directional signals instead.
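These thresholds can be expressed as a small classifier. A sketch under two assumptions: "beating" CPA means a lower CPA, and anything between the two thresholds gets a "hold" label, which is not a term from the text.

```python
def classify(metric, baseline, higher_is_better=True, win=0.20, fix=0.15):
    """Label a creative as 'winner', 'fix', or 'hold' relative to baseline."""
    change = (metric - baseline) / baseline
    if not higher_is_better:
        change = -change  # for CPA, a drop in cost counts as an improvement
    if change >= win:
        return "winner"
    if change <= -fix:
        return "fix"
    return "hold"


print(classify(0.032, 0.025))                        # CTR up 28%
print(classify(50.0, 40.0, higher_is_better=False))  # CPA up 25%
print(classify(0.026, 0.025))                        # CTR up 4%
```

The three calls return winner, fix, and hold in that order. Run the check only after the 48–72 hour window has passed, since early readings swing widely.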

Fixing losers isn't a shame game. Swap the hook, tweak the first 3 seconds, change the CTA color, or test voiceover vs. captions. Duplicate a near-miss and iterate — small creative swaps inside the 3x3 cells often flip duds into stars. Where possible, automate rules to shift budgets and create duplicates so your team stops babysitting spreadsheets and starts shipping better ads.

- 🚀 Scale: Double spend on the top performer, keep creatives fresh for 7–10 days.

- 🤖 Automate: Create rules to boost bids after X conversions and pause after Y poor CPAs.

- ⚙️ Triage: Pause the bottom 30%, rework one element, then re-enter the grid.

Aleksandr Dolgopolov, 07 December 2025